This blog has moved to https://potomacinstitute.org/publications/crest-blog

Please visit the new location for future updates!

It’s time for Silicon Valley and all the other tech giants across commercial industry to stop turning up their noses at working alongside the federal government, roll up their sleeves, and do some work. S&T innovation, as well as our national security, is at risk. Industry sees the government as more regulation, shady intrusion, and a cumbersome process to work with, without acknowledging that most of the technology it uses daily came directly out of government-funded R&D.

If you’re reading this blog on a computer, an iPad, or the latest iPhone, you can thank the U.S. government. Most Americans are probably unaware that the government funded an incredible amount of the technology that went into your newest 4K TV and many Apple products. Not to mention that it was a government agency that gave the world the Internet. Consider a current example: the space industry. Commercial industry is starting to build rockets (Musk, Bezos, etc.) and will soon offer trips into low Earth orbit for private citizens! Who do you think has been researching and exploring low Earth orbit and the universe for decades? Government R&D spurs commercial innovation, and that is exactly how it should be, but this is not a one-way street.

The government has not once asked for financial compensation for any of the technology it has provided to commercial industry, nor should it. The government’s role should be to fund basic research and explore new areas of science that may be too risky for commercial industry to invest in. However, that does not mean commercial industry should not assist the federal government. Companies can provide capital investment in R&D, expertise, or manpower. The return on investment is real for industry as well: public-private partnerships, institutional knowledge, breakthrough R&D, and yes, some patriotic “feel good.”

Just this week, Jeff Bezos said “this country is in trouble” if big tech companies do not collaborate with the U.S. military. Bezos cited employee concerns about partnering with the military or the Department of Defense as one reason some tech companies are backing out of projects. Project Maven, Google’s military drone contract, has been abandoned due to employee backlash. This is ironic, because the technology that led to Google Street View, the “Aspen Movie Map,” was funded by none other than the Defense Advanced Research Projects Agency in 1978.

As near-peer competitors become a bigger threat, the time to partner is now. Commercial innovation has moved well past that of the federal government and the Department of Defense. The wars we fight in the future will involve a technology space like we have never seen, and we need to ensure that we are on its cutting edge. With real partnership and joint research, we could create technological prosperity for decades to come and continue to lead the world in innovation.

By Alyssa Adcock

The U.S. government must bring antitrust action against Google immediately. This should include (1) requiring Google to make its full search algorithm public and (2) prohibiting it from buying up competitor companies. Google is a monopoly. It has a monopoly on search. It has a monopoly on search advertising. It has restricted innovation, stifling any and all of its competitors. Swift action must be taken to prevent further antitrust violations. A private company should not control the flow of information on the internet.

Google should be required to make its search algorithm fully public and available. Google has been an unopposed search monopoly for almost a decade. Over 90% of all internet searches are conducted through Google platforms; the second most used search engine, Bing, has 2% of the market. A private company should not control the flow of information; this gives the company too much power. The U.S. government should require Google to make its search algorithm public and ensure:

– Accessibility: Companies should have unrestricted, full access to the entire algorithm without throttled speeds.

– Transparency: Google must not restrict or manipulate content in the search index (e.g., arbitrarily moving a search result to a lower position), and other companies should be allowed to add appropriate content.

– Free or nominal fees: No fees should be required for low-volume users. For high-volume users (e.g. Microsoft’s Bing or DuckDuckGo), Google should be allowed to impose minimal access fees.

Google is restricting innovation through its near-exclusive ownership of internet search. When ownership of a necessary product (in this case, the search index) no longer serves the public good, the government has an obligation to step in. In the 1950s, AT&T, the parent company of Bell Labs, was a telecommunications monopoly. Following a government antitrust lawsuit, the 1956 consent decree required AT&T to share Bell Labs’ patents with other companies. In the following five years, innovation building on those now-free patents increased by 17%. Monopolies suppress technological innovation and competition, both of which drive our economy forward.

Google should be restricted from acquiring competitor companies. Since going public in 2004, Google has acquired more than 200 companies, including YouTube (the second most visited website in 2019, behind Google.com), Android (the most widely used smartphone operating system worldwide), and DoubleClick (which holds more than 50% of the U.S. market share for advertising platforms). Many of these acquisitions are search start-ups, including the 2018 buyout of Tenor, an image search engine. Some tech start-ups even center their business plans on being acquired by Google; this mentality is the opposite of the spirit of competition. Google has been unrestricted in acquiring its competitors, which limits competition, raises the barrier for new entrepreneurs, and reduces innovation.

Since 2017, Google has already been fined over $8 billion by the European Union for antitrust violations. The U.S. government has fallen behind in regulating Google. Only recently, the U.S. Justice Department announced it would review the practices of online platforms, including Google. This review must find Google in violation of antitrust laws.

Government action must be taken to ensure there is sufficient competition and innovation in the internet search industry: Google must be regulated. Access to online data and the internet must not be controlled by a single company.

Our nation’s reliance on space has reached unprecedented levels, and the federal government must have a unified strategy commensurate with its importance.

Since the launch of Sputnik in 1957, the impact of space-based infrastructure has increased year after year. Today, more than ever before, the benefits brought from orbit pervade every level of society: GPS enables over $1 billion of economic activity per day, NOAA satellites collect weather data to warn of upcoming storms and track long term climate data, communications satellites provide worldwide, irreplaceable coverage for both civilian and military operations, and much more. These industries touch on nearly every department, agency, and office of the federal government and impact each American every day.

Despite the crucial role that space plays in every facet of government and commercial operations, the federal government does not have a coordinated National Space Policy. The last policy, issued in 2010 by the Obama Administration, is outdated and does not sufficiently address all key policy areas. The current administration has taken steps toward a national strategy by outlining several key features that should be included in a national policy, issuing four space policy directives, and reconstituting the National Space Council. Individual agencies have their own stated policies: NASA issued a strategy last year that outlined its plan for the next four years; the Department of Commerce outlined the general goal of promoting commercial space activities in its departmental strategic plan; the Department of Defense and the Office of the Director of National Intelligence have enumerated space policy within their own national security plans; and the Department of Transportation has its own National Space Transportation Policy from 2013 and has received further policy direction from Space Policy Directive-2. While these entities have policy strategies for space, there remains no overarching national strategy to tie them together.

The development of a comprehensive, cohesive national strategy is critical and should include key stakeholders from every sector of the national space enterprise. A new National Space Strategy will mitigate duplicative program efforts, create accountability by assigning specific space issues to particular offices, agencies, or departments, and create a coordinated vision throughout the enterprise. Furthermore, developing a national strategy is an opportunity to identify key policy issues that remain unresolved and to provide clarifying language on each, including which government parties are responsible for the various issues and how they are to interface with other interested parties.

A new National Space Strategy must work toward identifying the large-scale challenges of tomorrow and assign ownership of them today. This will include, but is not limited to: governance of military installations, scientific outposts, and colony settlements; ownership rights of mined resources and the accompanying extraction installations; defense of growing infrastructure in space and corresponding intelligence gathering capabilities; nuclear or solar power for space architectures; the tracking, collection, and repurposing of space debris; regulation of ever-increasing commercial activities both on orbit and for related ground infrastructure; and increasing long-term human presence in space and the necessary biomedical research needed to do so.

The creation of an overarching National Space Policy will allow the United States Government to coordinate the ongoing efforts in space today, while preparing for the continued complexity of tomorrow. Given the critical nature of our dependence on space, it is imperative to create a comprehensive strategy now to guide this future and make way for the many policies that will be required.

By Jared Mondschein, Ph.D.

Firearm homicide rates are 25 times higher in the United States than in other high-income nations. Over 30% of the world’s mass shootings occur in the United States. These statistics expose an unacceptable truth: the United States plays host to too many acts of firearm-related violence. The U.S. is home to the best scientists in the world, and they should be unleashed to improve our understanding of the root causes of firearm violence and the impact of the various policy options available to lawmakers. The United States Government should fund a comprehensive research agenda on the causes and consequences of gun violence that would enable evidence-based debates on public policy options.

Following the recent spate of firearm attacks in the United States, influential public figures often espouse positions rooted in preconceived beliefs and political values. This behavior continues an ongoing pattern in which a violent event captures national attention for a short period of time, draws hyperbolic, inflammatory comments from all sides of the political spectrum, and is then pushed to the recesses of the national consciousness.

Policy is needed to curb gun violence. And decision makers must be provided data in order to create sensible policy. They must be able to “see through the weeds” and ignore superfluous rhetoric devoid of evidence. Unfortunately, this evidence does not exist today. It has not been gathered and the problem has not been studied.

In response to the deaths of 20 children and 6 adults at Sandy Hook Elementary School, then-President Obama announced 23 executive actions directing federal agencies to improve knowledge of the causes of firearm violence, the interventions that might prevent it, and strategies to minimize its public health burden. In response, the Centers for Disease Control and Prevention (CDC) worked with the National Research Council to develop a research agenda focusing on the public health aspects of firearm-related violence. The ensuing report called for research aimed at enhancing our understanding of firearm violence, including the factors that influence the probability of firearm violence, the impact of behavioral interventions and gun safety technology, and the influence of video games and the media.

The CDC has not received sufficient funding to carry out peer-reviewed scientific studies on the topics described above. In 2018, House appropriators rejected a proposal to earmark $10 million for the CDC to sponsor competitive grants supporting gun violence research (a figure amounting to no more than 0.09% of the FY2018 CDC budget). In general, federal agencies are strongly discouraged from funding research into the causes of and possible solutions to gun violence.

The United States Government should fund a comprehensive research agenda on the causes and consequences of gun violence. This research program should take an interdisciplinary approach that examines the impact of individual characteristics, family history, the local community, firearm availability, and national or international influences. The program should use a public health approach that involves three elements: (1) a focus on prevention, (2) a focus on scientific methodology to identify risks and patterns, and (3) collaboration among mental health experts, epidemiologists, sociologists, and behavioral scientists, among other experts.

It is crucial that the United States solve the problem of gun violence. The evidence that emerges from the study of gun violence causes and consequences must be used directly in effective policymaking.

Dr. Jared Mondschein is a Research Associate at the Potomac Institute for Policy Studies, where he works with government agencies to provide non-partisan evidence-based policy recommendations on a range of science and technology policy issues. He has expertise in renewable energy, innovation, and microelectronics.

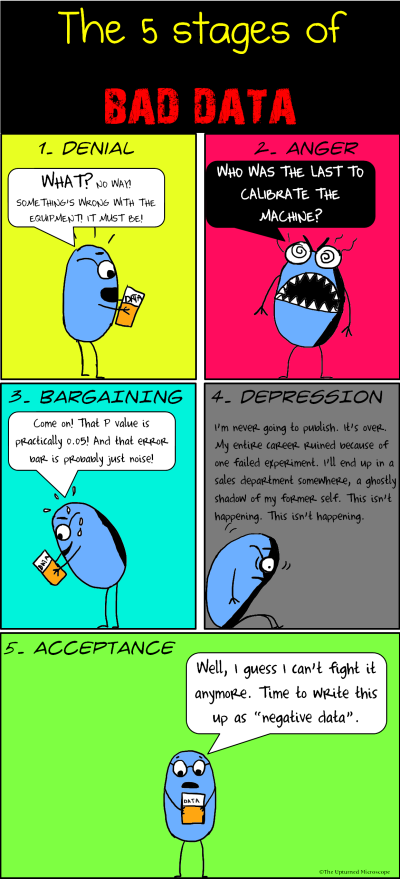

Albert Einstein is often quoted as saying, “Failure is success in progress.” Winston Churchill is credited with a similar sentiment: “Success is stumbling from failure to failure with no loss of enthusiasm.” But too often in academic science, a result that fails to support the tested hypothesis is discarded, never to be shared or further investigated. This is disastrous for scientific and technological progress.

Science is meant to be transparent. For science and society to progress, all scientific studies should be published to the broader community. But this doesn’t happen. The failure to publish negative results is detrimental to scientific progress and should be fixed immediately.

Most academic scientists need a high publication output with a high citation rate to receive competitive grants that fund their research and enable promotions. This “publish or perish” culture in academia forces researchers to produce “publishable” results. And for a result to be “publishable”, it most likely has to be a positive result that supports the tested hypothesis. Studies that produce negative results often end up buried in the lab’s archive, never to see the light of day.

Retrieved from: https://theupturnedmicroscope.com/comic/the-5-stages-of-bad-data/

But most scientific work produces negative results. Not publishing these results wastes U.S. taxpayer money, as federally funded scientists may unknowingly repeat the same “failed” experiments as previous studies. Scientific progress would become drastically more efficient if negative results were published.

This bias against negative results has a big impact on scientists, especially young scientists in graduate school. Instead of being viewed in a positive light, negative results are often associated with flawed or poorly designed studies and are therefore viewed as a negative reflection on the scientist. The inability to publish negative results threatens to stunt not only the progression of science, but the United States’ ability to train the next generation of scientists.

Recommendation

The U.S. Congress should add language to the NSF Authorization and Appropriation that requires the NSF to annually show that 50% of all NSF funding resulted in validated negative results or results that invalidated previously accepted science.

America is on the verge of a new industrial revolution powered by biology, but outdated policies are killing it.

Using recent advances in genetic engineering, scientists are repurposing life in completely new ways. We are designing biosensors that monitor our ecosystems, sweeter strawberries with a longer shelf life, and goats that produce stronger-than-steel spider silk. Such remarkable engineering is possible thanks to advances like low-cost, high-efficiency gene editing using CRISPR, low-cost genome sequencing, and rapid improvements in gene synthesis.

But existing policies for gene-editing technology and its products are woefully outdated and create a regulatory environment that kills innovation. For starters, regulatory oversight is cumbersome and split among the FDA, USDA, and EPA. Next, products of genetic engineering are regulated on the basis of unfounded fears rather than data.

Take the example of hornless cattle. Farmers want the hornless trait because horns are a danger to other cattle and to farm workers. Hornless beef cattle exist naturally because of a spontaneous mutation. Dairy cows, however, have horns, and breeding them conventionally to become hornless is completely impractical. Using gene-editing technology, scientists developed hornless dairy cows, but they never went commercial: they were killed by FDA regulations based on outdated fears rather than science and data.

The costs associated with this regulatory environment have been enormous. For instance, the regulatory compliance costs of taking a new biotech crop to market between 2008 and 2012 were roughly $36 million. This burdensome, costly, and inflexible regulatory environment has stifled market competition and innovation. We must unleash American innovation in biotech by fixing the regulatory system to account for recent advances in genetic engineering.

I propose that Congress enact new legislation for gene-editing biotechnology with the following framework: (a) stipulate that policies assess the outcomes of gene editing rather than the process itself, and (b) establish a new agency that oversees gene-editing technology.

New laws must be agnostic to the process of genetic engineering. Regulations should assess the new functions that arise from gene editing rather than the process that produced them. Policies must be flexible enough to account for differences among products and the extent of regulation each requires.

Enforcement of new policies and oversight of genetic engineering and its products should be conducted by a new federal agency. This agency would review applications for new products, assess the scope of necessary regulatory compliance, and coordinate with other federal agencies if necessary. This system would streamline the regulatory process for businesses, who would not have to coordinate independently with multiple federal agencies.

Our children’s education is vital. And we are on the cusp of a pedagogical revolution, an upending of traditional instruction. We must invest now to keep education in lockstep with technological progress.

Automation, machine learning, and artificial intelligence may be serving up the greatest challenge we have ever faced when it comes to education. As these technologies displace jobs at faster and faster rates, we’ll increasingly need a workforce that’s adaptable. We need people who are not just ready for some of tomorrow’s jobs. We need people who are ready for any of tomorrow’s jobs. We need a population that can learn new skills incredibly quickly and can perform complex problem solving across multiple domains.

Fortunately, the same forces disrupting the labor market can be harnessed to disrupt our educational system. Machine learning and artificial intelligence can assist in creating a generalized and flexible curriculum that trains a population of thinkers who can seamlessly transition between careers.

The technology is here, but in its infancy. MATHia is a machine-learning tool that aims to personalize tutoring. It collects data on students’ math progress, provides tailored instruction, and helps students understand the fundamental aspects of mathematical problem solving. Intelligent tutoring systems can also support human-machine dialogue that helps students learn new languages.

These are admirable approaches, but they lack the much-needed problem-solving punch to train truly adaptable individuals across many domains. They fail to tap into what truly makes for effective teaching. A consensus report from the National Academy of Sciences (NAS) states that mentorship in the form of continuous and personalized feedback is key to effective learning. This is a far cry from the current state of education, wherein students are taught in large classrooms and assessed for rote knowledge on standardized exams.

According to the NAS, “accomplished teachers…reflect on what goes on in the classroom and modify their teaching plans accordingly. By reflecting on and evaluating one’s own practices…teachers develop ways to change and improve their practices.”

Thankfully, continuous reflection and improvement are the bread and butter of machine learning algorithms. AI will therefore be adept at delivering personalized feedback to every single student. This feedback, in turn, will provide students with the cognitive toolbox to transfer knowledge between a litany of different subjects.

The current lack of knowledge transfer is at the crux of today’s workforce debates: arguments abound on how to “reskill” workers displaced by automation. This is important. But the reskilling debate is nothing new, and it’s only one piece of the puzzle. We must also focus resources on creating a workforce that needs less reskilling: a workforce that can adjust to new labor demands in the blink of an eye. We must begin early, in primary and secondary education.

In December 2017, the House introduced the “FUTURE of Artificial Intelligence Act.” Dead on arrival, it had only one small provision addressing education. This act must be resurfaced, and it must give AI in education its due. As the technology landscape changes, so too will the labor landscape. Education must evolve to meet this need.

I know everything you did over the last two years. I know where you went, who you were with and everything you thought about, down to each second of the day. I know this because I hacked into the brain-computer interface (BCI) that records your memories and stores all of your thoughts. To make matters worse, you weren’t even aware that most of this data was collected by your BCI.

The technology to read minds is already in the lab and will soon be commercially available. The broader policy debate we have today on privacy and security issues related to data must include BCIs and the neural data they generate. In fact, there is an urgent need to do this.

Neural data is a unique biometric marker, like fingerprints and DNA, that can accurately identify unique individuals. What is worrisome is that biometric data privacy is mostly non-existent in the United States. Often, it is collected without consent or knowledge. For instance, people living in 47 states can be identified through images taken without their consent, using facial recognition software.

As neural data is increasingly incorporated into each person’s biometric profile, any notion of privacy will go flying out the window. In contrast to other biometric markers that mostly describe physical characteristics, neural data can give precise insight into the most intimate details of our minds. Allowing this information to become an engine for profit threatens our fundamental right to privacy.

To remedy this issue, I propose that Congress enact a Neural Data Privacy Act (NDPA).

The central premise of the NDPA is that individuals must have a fundamental right to cognitive liberty. This means that people must be free to use or refuse BCIs without fear of discrimination, and that consent is always required for the collection of any neural data. Furthermore, strict limitations will be imposed on the types of neural data that can be collected and the purposes for which they can be used. For instance, businesses and employers will be prohibited from profiting from neural data by selling or leasing it to third parties.

Because this legislation will establish a fundamental right to cognitive liberty, a violation of this right will result in severe penalties. Language criminalizing invasion of cognitive liberty will be included in the NDPA, with a mandate for law enforcement agencies to enforce it. Violations of cognitive liberty fall under a few broad categories: accessing neural data without consent, distributing neural data without consent, and compelling an individual to use a BCI against their will.

Instantaneously, your brain power increases by an order of magnitude. Previously difficult problems are now trivial. Tip-of-the-tongue moments are a thing of the past. All manner of intellectual and creative pursuits are at your fingertips.

This is the new reality with the Brain Cloud.

When brain-computer interfaces are the new normal, we’ll prosper from the selective advantages of both silicon and biology.

Think of the electrical grid. If I install solar panels outside my house that provide more energy than I need, that energy flows back into the grid. Then, I’m provided an energy credit toward my next bill. I’ve made the investment in something that society can harness, and I’m repaid for that investment.

Take another example: SETI@home allows you to loan out the processing power of your computer to analyze data for the Search for Extraterrestrial Intelligence. When you’re not using the computer, it’s still providing something useful to society.

Enter the Brain Cloud. When I’m asleep, let’s say, I’ll be able to loan a portion of my brain’s processing power to the grid through a brain-computer interface. I can do this because much of my brain is actually a backup system, a sort of biological insurance policy. Case studies have shown that some individuals are born with only half a brain, only portions of their cortex, or no cerebellum at all. Yet, astonishingly, they lead relatively normal lives.

Of course, the beauty of the Brain Cloud is that no one has to permanently give up portions of their brain. Instead, processing power is out on loan only temporarily.

At this point, a reasonable person might be wondering: Why would I do that?

Just like with the electrical grid, there’s much to be gained. Each time I put my brain on loan, a portion of the processing power I lend out will be used to mine for cryptocurrency through a blockchain. I’ll receive compensation for putting processing power in the grid, and others will be able to harness that power when they need it.

The blockchain will serve another purpose. It will keep an exact, private, and irrefutable ledger of how much processing power I’ve loaned. While anyone will be able to observe that a transaction occurred on the blockchain, no party will have access to the contents of that transaction, allowing me to keep the contents of my brain private.
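To make the ledger idea a bit more concrete, here is a minimal Python sketch of one way "anyone can see that a transaction happened, but not what it contained" could work. It is a single-party hash chain with salted hash commitments, not a full blockchain: there is no consensus, mining, or network, and the `Ledger`, `Entry`, and field names such as `compute_units` are hypothetical illustrations rather than any real Brain Cloud design. Each entry publishes only a SHA-256 commitment to its contents plus a link to the previous entry, so observers can check the chain's integrity while the owner keeps the salt needed to later prove what an entry said.

```python
import hashlib
import json
import secrets
from dataclasses import dataclass, field


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of data."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class Entry:
    index: int
    prev_hash: str   # hash of the previous entry; links entries into a chain
    commitment: str  # salted hash of the contents; the contents are never published
    entry_hash: str


@dataclass
class Ledger:
    # Hypothetical ledger of processing-power loans (illustration only).
    entries: list = field(default_factory=list)

    def append(self, contents: dict) -> tuple:
        """Record a loan; return (entry, salt). The owner keeps the salt private."""
        salt = secrets.token_bytes(16)
        payload = json.dumps(contents, sort_keys=True).encode()
        commitment = sha256_hex(salt + payload)
        prev_hash = self.entries[-1].entry_hash if self.entries else "0" * 64
        index = len(self.entries)
        entry_hash = sha256_hex(f"{index}|{prev_hash}|{commitment}".encode())
        entry = Entry(index, prev_hash, commitment, entry_hash)
        self.entries.append(entry)
        return entry, salt

    def verify_chain(self) -> bool:
        """Anyone can check that no published entry was altered or dropped mid-chain."""
        prev = "0" * 64
        for i, e in enumerate(self.entries):
            expected = sha256_hex(f"{i}|{prev}|{e.commitment}".encode())
            if e.index != i or e.prev_hash != prev or e.entry_hash != expected:
                return False
            prev = e.entry_hash
        return True

    @staticmethod
    def reveal_matches(entry: Entry, salt: bytes, contents: dict) -> bool:
        """The owner can optionally prove what an entry contained by revealing salt and contents."""
        payload = json.dumps(contents, sort_keys=True).encode()
        return sha256_hex(salt + payload) == entry.commitment


ledger = Ledger()
first, first_salt = ledger.append({"donor": "me", "compute_units": 42})
ledger.append({"donor": "me", "compute_units": 7})
print(ledger.verify_chain())  # True
print(Ledger.reveal_matches(first, first_salt, {"donor": "me", "compute_units": 42}))  # True
```

The random salt matters: without it, an observer could guess likely contents (such as a small number of compute units) and hash each guess until one matched the published commitment.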

We are all perpetually hamstrung by our lack of brain power. Yet, for the processing they do, brains are fantastically efficient. Processing in computers, on the other hand, requires massive amounts of energy. Most of this energy ends up as heat, rather than the actual computational processes we want in the first place.

By moving processing power through a brain-computer interface grid, we would be selecting for the best of both worlds: super-efficient conduction of signal through machines, and super-efficient processing of signal through brains.

It’s a win-win.